This will be an evolving post as I document the installation process, along with some configuration and testing.

I’m installing VMware vSphere 6.5 under my current virtualization platform to give it a spin. I’m most curious about the web interface, now that it has moved exclusively in that direction. I *HOPE* it is much better than my current vSphere 5.5 U1 deployment.

9:39PM Installation

So far, installation is going well. As a simple test setup, I created a virtual machine on my current vSphere 5.5 system with a 20GB HDD, 4 vCPUs (1 socket, 4 cores), and 4GB RAM.

The only alert I’ve received at this point is a compatibility warning for the host CPU, probably because of nesting?

9:53PM Installation Completed

Looks like things went well, so far. Time to reboot and check it out.

9:58PM Post Install

Sweet, at least it booted. Time to hit the web interface.

Login screen at the web interface looks similar to 5.5.

The web console’s performance is a night-and-day difference from vSphere 5.5. I’m totally liking this!

10:30PM vFRC (vSphere Flash Read Cache)

I just realized, after 10 minutes of searching through the new interface, that I cannot configure vFRC in the host’s web console. I need to do this with vCenter Server or through the command line. So, off to the command line I go.

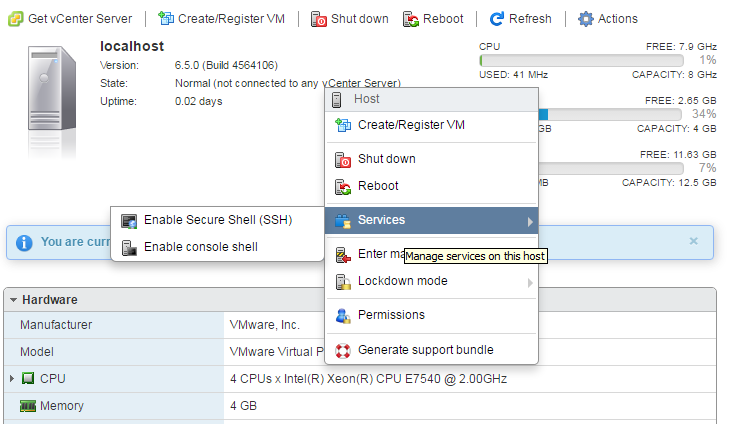

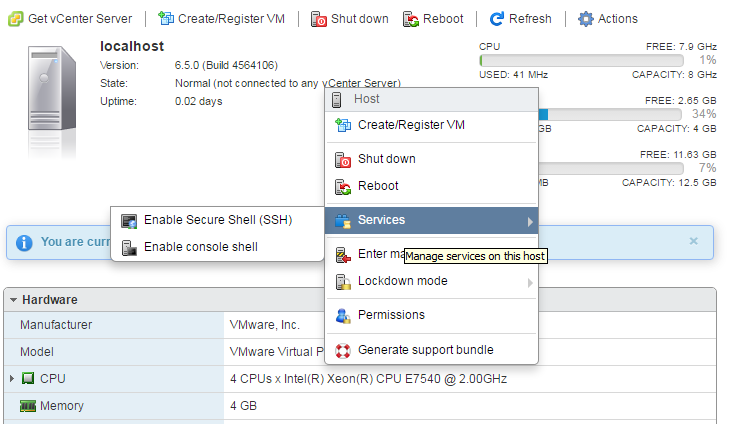

First, I enabled SSH on the host, which is easy enough: right-click and choose Services > Enable Secure Shell (SSH).

After SSH was enabled, I logged in. Not knowing much about what commands were available, I gave it a shot with `esxcli storage` just to see what I could see. I saw `vflash`. Cool, haha.

Next, I dug into that with `esxcli storage vflash` to see what I had available. Sweet mother, I have cache, module and device namespaces. OK, I went further and chose device. The rabbit hole stops here, but I had no idea which {cmd}s were available. A quick trick I remembered from some time ago, combined with grep, got me what I wanted. Alright, alright, alright!
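For anyone following along, here is a minimal sketch of that kind of lookup. It assumes the "quick thing" is `esxcli esxcli command list`, which prints every esxcli namespace and command, piped through grep. The function name `list_vflash_commands` is made up for illustration.

```shell
#!/bin/sh
# Sketch only: show the esxcli commands that live under the vflash namespaces.
# list_vflash_commands is a hypothetical helper name, not part of ESXi.
list_vflash_commands() {
    # Bail out early when this is not run on an ESXi host.
    if ! command -v esxcli >/dev/null 2>&1; then
        echo "esxcli not found; run this on the ESXi host" >&2
        return 1
    fi
    # List all esxcli commands and keep only the lines mentioning vflash.
    esxcli esxcli command list | grep -i vflash
}
```

Paste the function into the SSH session and run `list_vflash_commands`. The one-liner inside it works just as well on its own.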

Knowing I have zero SATA/SAS/PCIe SSDs connected, I did the inevitable. I checked to see what SSDs were attached to my hypervisor. Can you guess, like I did, that the answer is zero? VMware doesn’t even bother responding with “You don’t have any SSD disks attached.” Just an empty response. I’m cool with that.
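A rough sketch of that check, assuming `esxcli storage vflash device list` is the command in question. It also cross-checks with `esxcli storage core device list`, which reports an "Is SSD" field for each device. The function name `show_ssd_status` is hypothetical.

```shell
#!/bin/sh
# Sketch only: check whether the host sees any SSDs it could use for vFlash.
# show_ssd_status is a hypothetical helper name, not part of ESXi.
show_ssd_status() {
    # Bail out early when this is not run on an ESXi host.
    if ! command -v esxcli >/dev/null 2>&1; then
        echo "esxcli not found; run this on the ESXi host" >&2
        return 1
    fi
    # SSD devices known to vFlash; prints nothing when there are none.
    esxcli storage vflash device list
    # Cross-check: show each device's name and whether ESXi flags it as an SSD.
    esxcli storage core device list | grep -E "Display Name|Is SSD"
}
```

On a host with no SSDs, the first command stays silent and every device in the second listing should show `Is SSD: false`.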

So this is where I’ll leave it for now. I’ll attach an SSD disk and continue this article soon.